Challenges of Web Scraping and How to Overcome Them

There are several aspects of web scraping you should be aware of. For instance, while you might have heard about its numerous benefits and are feeling excited to get started, it is also important that you learn the challenges so that you can know what you are getting into

Indeed, web scraping provides businesses with an opportunity that would be impossible otherwise – the ability to have a large dose of relevant user data at every point.

This data can be used to protect the brand, monitor the competition and market, observe and understand trends, analyze consumer sentiments, or even find leads.

Particularly in the automotive industry, data can be used to ride on top of what would otherwise have been marketing challenges such as digitization, globalization, and increased competition. It is recommended to see the new post from Oxylabs if you wish to dig deeper into how the automotive industry is affected by data scraping.

Without web scraping, collecting this data will be slow and ineffective. Yet, web scraping is a sea full of data and challenges that would stop at nothing to hamper the process.

What Are the Peculiarities and Features of Web Scraping?

Web scraping or web data extraction is getting data from the web. This is the simplest definition. However, the process involves everything from when the request is sent out to when the results are returned and stored with everything in-between.

It is now considered the most efficient solution for data collection. It includes using proper proxies, bots, and AI-based software to interact with multiple data sources and collect their information.

Another thing that makes it so efficient is that it is automated and saves time while increasing accuracy and productivity.

The Main Challenges of Web Scraping

It is only natural that this important process could come at a cost and be surrounded with challenges that would want to prevent it.

Changes in Website Structure

The first challenge to consider is something that is always so often. Websites often upgrade and modify their features and structures to align with technological advancement.

While this may sound like something awesome for the website, it can pose a significant challenge to web scraping.

For instance, when these changes take effect, scraping bots written to scrape the former structures would find it impossible to interact with or extract from the new and improved structure.

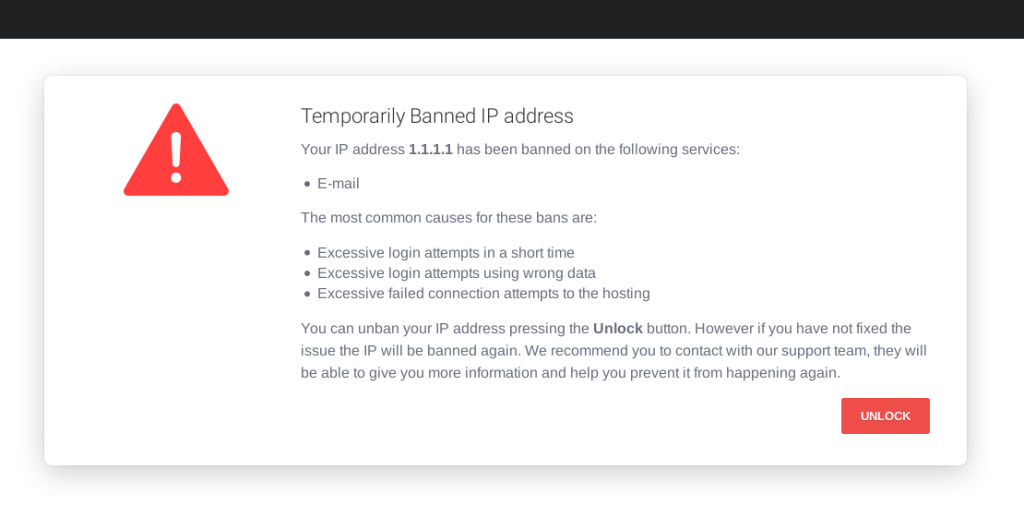

IP Blocking

This is also another very common challenge as it can happen to anybody and tends to end data extraction immediately it occurs.

When visiting a website, it is mandatory to disclose your IP to establish a connection. By implication, it is impossible to be on the internet without an IP.

Every device has one, and it enables the communication between your device and the web.

However, once an IP is recorded, it can be monitored closely and banned after performing repetitive tasks on a website.

This is a direct shot to web scraping and an automated repetitive process. The website automatically makes it impossible to scrape with that IP by blocking or banning an IP.

Other Anti-Scraping Technologies

Aside from IP blocks, websites use other technologies to limit or completely restrict web scraping.

For instance, on and CAPTCHA tests are widely used to prevent people from scraping certain websites.

Bot detection techniques are used to easily identify when a user is behaving like a bot. This could include observing how they navigate a website or how often they perform certain tasks and whether or not there is any pattern to their activities.

Once any of the above are observed to be unlike what a human would do, the user is classified as a bot and blocked, and the web scraping is brought to a halt.

Secondly, CAPTCHA tests are used to determine humans from bots. They contain simple tests that humans would easily pass and bots would find harder to deal with. The result is used to identify a bot and ban it immediately.

Geo-Restrictions

This is also another major problem for web scraping and involves using an IP to identify the actual location of a user.

Once the user is identified as browsing from a forbidden region, their access is denied partially or completely.

When this is done during web scraping, it can prevent car makers and sellers from certain parts of the world from having the same access to relevant data as their counterparts in other parts of the world.

How A Web Scraper Tool Can Serve As An Effective Solution To These Challenges

Luckily, bypassing the above challenges is as simple as using the right tools. An example of such a tool is a web scraper tool.

For instance, a web scraper tool combined with a proper proxy would conceal your IP and use different IPs instead; this prevents you from getting blocked or banned and even prevents geo-restrictions.

Additionally, if you move further to learn how to use an auto web scraper that is AI-based, the tool can behave like a human, bypassing any anti-scraping technique.

It can also learn and adapt to different structural changes to prevent crashing when it meets a new or dynamic website.

Conclusion

Web scraping is a process maddened by many challenges that some users find impossible, but learning how to use an auto web scraper and other AI-based tools can help you easily overcome these challenges, especially in the automotive industry.